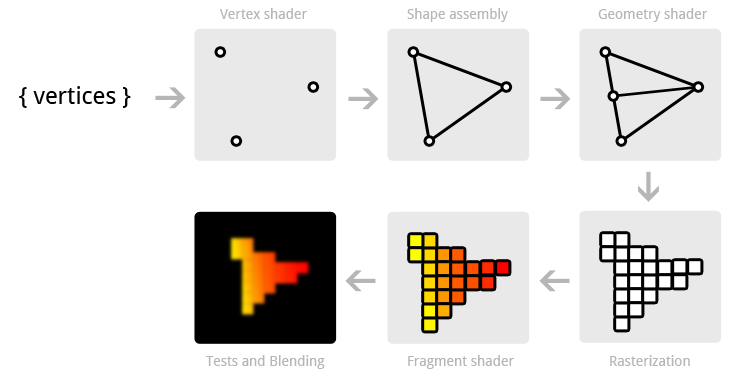

This week, I’m going to go over some simple full-screen post-processing effects and how to achieve them using shaders and frame buffers. If you haven’t yet checked out my post on frame buffers from last week, you might want to read that first. The two effects that I’m going to go over this week are grayscaling and blur.

Before we go over these effects, though, we need to set up some infrastructure for post-processing. We already have a FrameBuffer class to represent the frame buffer object, but we also need a few other things. First, we need a way to draw a texture onto a full-screen quad. So, let's make a few functions that allow us to do that:

// Store these variables wherever they will be accessible to the following functions.

GLuint fullScreenQuadVAO = GL_NONE;

GLuint fullScreenQuadVBO = GL_NONE;

// Initializes the full-screen quad.

void initFullScreenQuad()

{

// We can hard-code the quad data since it never changes, so we don't have to load an obj for it.

float VBO_DATA[] =

{

-1.0f, -1.0f, 0.0f, 0.0f, 0.0f,

1.0f, -1.0f, 0.0f, 1.0f, 0.0f,

-1.0f, 1.0f, 0.0f, 0.0f, 1.0f,

1.0f, 1.0f, 0.0f, 1.0f, 1.0f,

-1.0f, 1.0f, 0.0f, 0.0f, 1.0f,

1.0f, -1.0f, 0.0f, 1.0f, 0.0f

};

glGenVertexArrays(1, &fullScreenQuadVAO);

glBindVertexArray(fullScreenQuadVAO);

glEnableVertexAttribArray(0); // Vertices

glEnableVertexAttribArray(1); // Texture Coordinates

glGenBuffers(1, &fullScreenQuadVBO);

glBindBuffer(GL_ARRAY_BUFFER, fullScreenQuadVBO);

glBufferData(GL_ARRAY_BUFFER, 30 * sizeof(float), VBO_DATA, GL_STATIC_DRAW);

glVertexAttribPointer((GLuint)0, 3, GL_FLOAT, GL_FALSE, 5 * sizeof(float), (GLvoid*)0); // Vertices

glVertexAttribPointer((GLuint)1, 2, GL_FLOAT, GL_FALSE, 5 * sizeof(float), (GLvoid*)(3 * sizeof(float))); // Texture Coordinates

glBindBuffer(GL_ARRAY_BUFFER, GL_NONE);

glBindVertexArray(GL_NONE);

}

// Draws the full-screen quad to whatever frame buffer is currently bound.

void drawFullScreenQuad()

{

glBindVertexArray(fullScreenQuadVAO);

glDrawArrays(GL_TRIANGLES, 0, 6);

glBindVertexArray(GL_NONE);

}

These functions could be placed into a utilities file, or made static methods of a class of your choosing; it's completely up to you. initFullScreenQuad must be called once, after the OpenGL context has been created, in your game's initialization method or wherever you initialize your frame buffer(s).
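
The stride and offset arguments passed to glVertexAttribPointer are easy to get wrong, so here is a small sketch that derives them from a struct instead of counting floats by hand. QuadVertex and the helper functions are hypothetical names of my own, not part of the code above; the data is the same six vertices as VBO_DATA.

```cpp
#include <cstddef>

// Hypothetical helper type: one vertex of the full-screen quad,
// laid out exactly like one row of VBO_DATA.
struct QuadVertex
{
    float position[3]; // x, y, z in normalized device coordinates
    float uv[2];       // texture coordinates
};

// The layout only matches VBO_DATA if the struct has no padding.
static_assert(sizeof(QuadVertex) == 5 * sizeof(float), "QuadVertex must be tightly packed");

// Two triangles covering the whole screen, in the same order as VBO_DATA.
static const QuadVertex QUAD_VERTICES[] =
{
    {{-1.0f, -1.0f, 0.0f}, {0.0f, 0.0f}},
    {{ 1.0f, -1.0f, 0.0f}, {1.0f, 0.0f}},
    {{-1.0f,  1.0f, 0.0f}, {0.0f, 1.0f}},
    {{ 1.0f,  1.0f, 0.0f}, {1.0f, 1.0f}},
    {{-1.0f,  1.0f, 0.0f}, {0.0f, 1.0f}},
    {{ 1.0f, -1.0f, 0.0f}, {1.0f, 0.0f}},
};

// Size in bytes that would be passed to glBufferData.
constexpr std::size_t quadBufferSize() { return sizeof(QUAD_VERTICES); }

// Vertex count that would be passed to glDrawArrays.
constexpr std::size_t quadVertexCount() { return sizeof(QUAD_VERTICES) / sizeof(QUAD_VERTICES[0]); }

// Stride and byte offsets that would be passed to glVertexAttribPointer.
constexpr std::size_t quadStride() { return sizeof(QuadVertex); }
constexpr std::size_t quadPositionOffset() { return offsetof(QuadVertex, position); }
constexpr std::size_t quadUvOffset() { return offsetof(QuadVertex, uv); }
```

These values line up with the hard-coded ones in initFullScreenQuad: a buffer of 30 floats, a stride of 5 floats, and a texture coordinate offset of 3 floats.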

Next, we’re going to need a very simple vertex shader that basically just passes through the quad’s vertex position to gl_Position, and the texture coordinates to the fragment shader:

#version 420

layout (location = 0) in vec3 position;

layout (location = 1) in vec2 uv;

out vec2 texCoord;

void main()

{

texCoord = uv;

gl_Position = vec4(position, 1.0f);

}

This is the vertex shader you will be using for most of your post-processing effects. Now that we have that out of the way, we can talk about the effects we’re going to implement.

Grayscaling

Grayscaling is, like it sounds, the process of removing color from the scene and making it appear completely in grayscale. This is a very simple process: in the fragment shader, we output a weighted average of the red, green, and blue channels, called the luminance, for every channel. The code looks like this:

#version 420

in vec2 texCoord; // Note that this must be the exact same name as the variable outputted in the vertex shader.

out vec4 color;

uniform sampler2D tex; // The view of the scene, bound as a 2D texture.

void main()

{

// Sample the scene image at the provided texture coordinates.

vec4 source = texture(tex, texCoord);

// Mix the red, green, and blue channels to get the luminance.

// The weights approximate the ITU-R BT.601 luma coefficients, which reflect how sensitive the human eye is to each channel (most to green, least to blue).

float luminance = 0.2989 * source.r + 0.587 * source.g + 0.114 * source.b;

// Output the luminance for each color channel, producing gray.

color = vec4(luminance, luminance, luminance, 1.0f);

}

I'm not going to show the code for it, but from here on I'll assume that, on the C++ side, you have created a shader object called grayscaleShader from the above vertex and fragment shaders, and created and initialized a frame buffer object called mainBuffer. The following code can then be added to our draw method:

void draw()

{

// ... clear buffers and any other pre-draw operations here.

mainBuffer.bind(); // Causes the mainBuffer to be drawn to instead of the back-buffer.

// Draw your scene as usual here.

mainBuffer.unbind();

grayscaleShader.bind(); // Bind the shader object.

glBindTexture(GL_TEXTURE_2D, mainBuffer.getColorHandle(0)); // Binds the scene (stored in mainBuffer) as a texture for our grayscale shader.

drawFullScreenQuad();

glBindTexture(GL_TEXTURE_2D, GL_NONE);

grayscaleShader.unbind();

}

Note that since we do not have a frame buffer bound when drawFullScreenQuad is called, it will be drawn to the back-buffer. We could change it so that it is drawn to another frame buffer, which can then be used again for other processing.

Blur

The next effect I will cover this week is blur. Blur is the process of averaging each pixel with its neighbors, using weights that fall off with distance from the original pixel. So, for example, when blurring a given pixel, it will have a certain weight while its direct neighbor will have a slightly lower weight. The pixel on the other side of that neighbor will have an even lower weight, and so on. The important thing to remember is that the weights, when added together, should equal 1. Otherwise, the brightness of the final image will not be the same as the original image. While this process could be done in one shot two-dimensionally, it is easier, and cheaper, to instead do it twice, once horizontally and once vertically: with the nine-sample kernel used here, that is 9 + 9 = 18 texture samples per pixel instead of 9 × 9 = 81. Here is the fragment shader code for the horizontal blur:

#version 420

uniform sampler2D uTex; // Source image

uniform float uPixelSize; // Equal to (1.0 / windowWidth)

in vec2 texCoord;

out vec3 outColor;

void main()

{

// Sample pixels in a horizontal row.

// The weights add up to 1.

outColor = vec3(0.0, 0.0, 0.0);

outColor += 0.06 * texture(uTex, vec2(texCoord.x - 4.0 * uPixelSize, texCoord.y)).rgb;

outColor += 0.09 * texture(uTex, vec2(texCoord.x - 3.0 * uPixelSize, texCoord.y)).rgb;

outColor += 0.12 * texture(uTex, vec2(texCoord.x - 2.0 * uPixelSize, texCoord.y)).rgb;

outColor += 0.15 * texture(uTex, vec2(texCoord.x - uPixelSize, texCoord.y)).rgb;

outColor += 0.16 * texture(uTex, vec2(texCoord.x, texCoord.y)).rgb;

outColor += 0.15 * texture(uTex, vec2(texCoord.x + uPixelSize, texCoord.y)).rgb;

outColor += 0.12 * texture(uTex, vec2(texCoord.x + 2.0 * uPixelSize, texCoord.y)).rgb;

outColor += 0.09 * texture(uTex, vec2(texCoord.x + 3.0 * uPixelSize, texCoord.y)).rgb;

outColor += 0.06 * texture(uTex, vec2(texCoord.x + 4.0 * uPixelSize, texCoord.y)).rgb;

}

The shader for the vertical blur is similar, except that we offset texCoord.y instead of texCoord.x, and uPixelSize should be (1.0 / windowHeight) rather than (1.0 / windowWidth). On the C++ side of things, there are a couple of things that we need for this operation to work. We have to create the shader objects (remember, you need two, one for horizontal and one for vertical), along with three frame buffer objects: the mainBuffer and two extra buffers used to apply the blur. When you initialize the color attachment for the extra buffers, it will look something like:

// extraBuffer1.initColorAttachment(index, width, height, format, filter, wrap);

extraBuffer1.initColorAttachment(0, windowWidth, windowHeight, GL_RGB8, GL_LINEAR, GL_CLAMP_TO_EDGE);

// Note that we are using GL_RGB8 for the format, rather than GL_RGBA8, since we do not care about the alpha channel when blurring.
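
For reference, here is what the vertical blur shader described earlier might look like, written out as a C++ string in case you embed your shader source directly in code rather than loading it from a file. This is my own sketch that mirrors the horizontal shader line by line; the constant name BLUR_VERTICAL_FRAGMENT_SOURCE is hypothetical, and how the string reaches your shader object depends on your own shader class.

```cpp
#include <string>

// Assumed vertical counterpart of the horizontal blur shader: same weights,
// but the offsets are applied to texCoord.y instead of texCoord.x.
static const std::string BLUR_VERTICAL_FRAGMENT_SOURCE = R"glsl(#version 420

uniform sampler2D uTex;   // Source image
uniform float uPixelSize; // Equal to (1.0 / windowHeight)

in vec2 texCoord;
out vec3 outColor;

void main()
{
    // Sample pixels in a vertical column. The weights add up to 1.
    outColor = vec3(0.0, 0.0, 0.0);
    outColor += 0.06 * texture(uTex, vec2(texCoord.x, texCoord.y - 4.0 * uPixelSize)).rgb;
    outColor += 0.09 * texture(uTex, vec2(texCoord.x, texCoord.y - 3.0 * uPixelSize)).rgb;
    outColor += 0.12 * texture(uTex, vec2(texCoord.x, texCoord.y - 2.0 * uPixelSize)).rgb;
    outColor += 0.15 * texture(uTex, vec2(texCoord.x, texCoord.y - uPixelSize)).rgb;
    outColor += 0.16 * texture(uTex, vec2(texCoord.x, texCoord.y)).rgb;
    outColor += 0.15 * texture(uTex, vec2(texCoord.x, texCoord.y + uPixelSize)).rgb;
    outColor += 0.12 * texture(uTex, vec2(texCoord.x, texCoord.y + 2.0 * uPixelSize)).rgb;
    outColor += 0.09 * texture(uTex, vec2(texCoord.x, texCoord.y + 3.0 * uPixelSize)).rgb;
    outColor += 0.06 * texture(uTex, vec2(texCoord.x, texCoord.y + 4.0 * uPixelSize)).rgb;
}
)glsl";
```

The raw string starts with #version on its very first line, since GLSL expects that directive before anything other than comments and whitespace.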

The two extra frame buffers also don’t need a depth attachment, so we can omit that when initializing them. Once we have all our buffers initialized, we can use the following algorithm to apply the blur to our scene:

void draw()

{

// ... clear buffers and any other pre-draw operations here.

mainBuffer.bind(); // Causes the mainBuffer to be drawn to instead of the back-buffer.

// Draw your scene as usual here.

mainBuffer.unbind();

// First blur pass: reads the scene from mainBuffer and leaves the blurred result in extraBuffer1.

// Horizontal blur

blurHorizontalShader.bind(); // Bind the horizontal blur shader.

glUniform1f(blurHorizontalShader.pixelSizeLoc, 1.0f / windowWidth); // Send the pixelSize uniform to the shader.

extraBuffer2.bind(); // Draw to extraBuffer2.

glBindTexture(GL_TEXTURE_2D, mainBuffer.getColorHandle(0)); // Bind scene texture from mainBuffer.

drawFullScreenQuad();

glBindTexture(GL_TEXTURE_2D, GL_NONE);

extraBuffer2.unbind();

blurHorizontalShader.unbind();

// Vertical blur

blurVerticalShader.bind(); // Bind the vertical blur shader.

glUniform1f(blurVerticalShader.pixelSizeLoc, 1.0f / windowHeight);

extraBuffer1.bind(); // Draw to extraBuffer1.

glBindTexture(GL_TEXTURE_2D, extraBuffer2.getColorHandle(0)); // Bind the horizontally blurred scene texture from extraBuffer2.

drawFullScreenQuad();

glBindTexture(GL_TEXTURE_2D, GL_NONE);

extraBuffer1.unbind();

blurVerticalShader.unbind();

// Here, BLOOM_BLUR_PASSES is the number of additional blurring passes that you want to apply.

// Each pass will make the scene look more and more blurry.

for (int i = 0; i < BLOOM_BLUR_PASSES; i++)

{

// Horizontal

blurHorizontalShader.bind();

glUniform1f(blurHorizontalShader.pixelSizeLoc, 1.0f / windowWidth);

extraBuffer2.bind();

glBindTexture(GL_TEXTURE_2D, extraBuffer1.getColorHandle(0));

drawFullScreenQuad();

glBindTexture(GL_TEXTURE_2D, GL_NONE);

extraBuffer2.unbind();

blurHorizontalShader.unbind();

// Vertical

blurVerticalShader.bind();

glUniform1f(blurVerticalShader.pixelSizeLoc, 1.0f / windowHeight);

extraBuffer1.bind();

glBindTexture(GL_TEXTURE_2D, extraBuffer2.getColorHandle(0));

drawFullScreenQuad();

glBindTexture(GL_TEXTURE_2D, GL_NONE);

extraBuffer1.unbind();

blurVerticalShader.unbind();

}

// The last thing we need to do is move the final image from extraBuffer1 to the back-buffer.

extraBuffer1.moveToBackBuffer(windowWidth, windowHeight);

}

If you only want to use one blur pass, you can remove the for loop, but having it in there is a nice option in case you need a strong blur. Now, what if you wanted to blur the scene and then grayscale it, or vice-versa? Thankfully, it's pretty easy: all you have to do is use the output buffer from one as the input buffer to the other, like so:

// Blur algorithm here, but instead of calling extraBuffer1.moveToBackBuffer(windowWidth, windowHeight)

// we leave the image inside of extraBuffer1.

grayscaleShader.bind(); // Bind the shader object.

glBindTexture(GL_TEXTURE_2D, extraBuffer1.getColorHandle(0)); // Binds the blurred scene (stored in extraBuffer1) as a texture for our grayscale shader.

drawFullScreenQuad();

glBindTexture(GL_TEXTURE_2D, GL_NONE);

grayscaleShader.unbind();

And that’s it. Next week I’m going to talk about another interesting full-screen effect called bloom, and how to implement it.